AI and agentic AI adoption is accelerating across industries, and executives are feeling pressure from boards, investors, and other stakeholders to leverage AI and agentic AI safely for efficiency and innovation. But the reality is that many employees are already using AI tools in their day-to-day work, often without clear guidelines or oversight. A recent Slack study underscores this point: desk workers at companies with AI permissions in place are nearly six times more likely to experiment with AI tools, and employees who receive AI training are up to 19 times more likely to report that AI is improving their productivity. A similar Slack survey found that, without the necessary training and guidance, employees may not fully capitalize on the efficiencies AI can deliver.

I’ve advised many organizations and customers on this issue. Countless companies are trying to figure out how to create an internal AI use policy, but there’s no universal blueprint. That’s why we’re sharing guidance here: to help companies develop and implement internal AI use policies that enable employees to work faster and smarter while also protecting their organization from risk.

Creating thoughtful internal guidance on AI use wasn’t just a compliance move — it was a leadership moment. We wanted to model what responsible use looks like in practice while enabling our team to leverage the best possible tools for innovating.

Jeremy Wight, Chief Technology Officer, CareMessage

Key benefits & considerations when creating an internal AI use policy

A strong internal AI use policy must balance innovation with responsible use. Here are some key focus areas to consider when crafting guidelines:

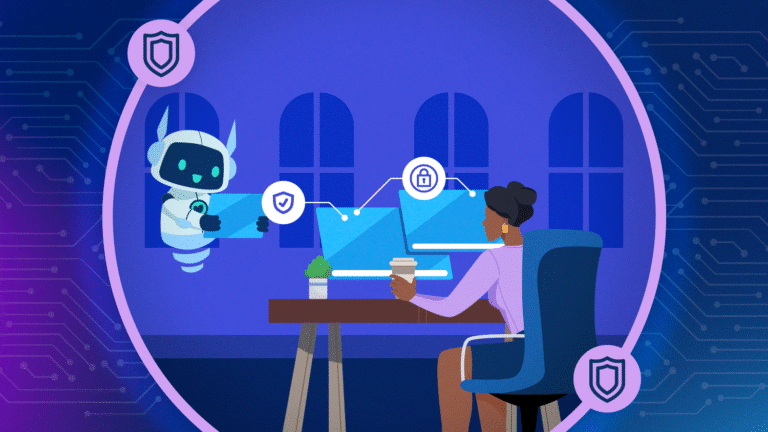

- Security & data protection: AI tools can inadvertently expose sensitive data, making data leakage and unauthorized access major risks. Policies should define clear boundaries on what data can be used in AI systems and ensure approved tools with encryption and access controls are in place.

- Regulatory compliance: Regulatory oversight of AI is rapidly evolving. At a minimum, companies must ensure their internal AI usage aligns with the growing number of AI legal requirements, including various U.S. state-level AI laws and the European Union AI Act. Some regulations prohibit certain AI use cases, require human oversight of AI decision-making, or mandate bias assessments and transparency measures. A well-structured internal AI policy helps organizations stay ahead of compliance requirements by providing practical guidance for employees.

- Alignment with company values: Internal AI guidelines should also reflect the organization’s ethical commitments and corporate principles. For example, many companies link their internal AI use policies to other internal standards, such as codes of conduct. At Salesforce, we are led by our values, including our Trusted AI principles, which also hold true for the agentic AI era. We use these principles to guide both our external and internal AI policy development, tailoring our employee guidance to be as practical and user-friendly as possible. Grounding internal AI use policies in company values also fosters trust among employees and customers alike.

4 steps to develop internal AI guidelines

The most effective AI use policies don’t just focus on what employees can’t do; they provide clear guidance on what they should do. Here’s a practical four-step framework for developing internal AI guidelines:

- Assess current AI use and engage cross-functional teams

Organizations should evaluate how employees are already using AI in their daily work. An internal assessment provides insight into existing and likely future AI adoption, helping identify both potential risk areas and areas of opportunity. Engaging legal, security, human resources, procurement, and engineering teams in the drafting process helps ensure the policy is comprehensive and accounts for compliance, security, ethical implications, and more. This will help organizations know where to provide more detailed practical guidance, both for areas of heavy use and for those likely to brush up against legal and ethical requirements. Examples include using AI to support employment decisions, using AI-generated videos, images, and voice in marketing, or any instance where AI interacts with sensitive company or customer data.

- Provide actionable guidance for employees

A well-structured AI policy should encourage safe experimentation by highlighting approved, and even encouraged, use cases, tools, and workflows. Companies should provide AI tools that securely connect to internal data sources and carry out tasks while maintaining privacy. For example, I use Slack AI daily: I can not only safely query Einstein to edit my written work and brainstorm ideas, but also find, summarize, and organize all the important company information that lives within Slack. Agentforce in Slack further expands my capacity by scheduling team meetings, answering questions for my team, and much more. Providing AI tools with built-in guardrails can help employees follow safe and ethical AI practices without unnecessary friction.

- Establish ongoing monitoring and iteration

AI policies must remain dynamic as technology and global regulations evolve. Organizations should set a cadence for regular policy updates to reflect new AI capabilities, regulatory changes, and emerging risks. Implementing a structured feedback loop that allows employees to provide input on AI usage challenges can also help refine the guidelines over time. While procurement teams play a role in evaluating AI tools before adoption, companies should prioritize working with trusted vendors who invest in security and responsible AI development on their behalf.

- Provide resources and training for employees

Organizations should establish clear channels where employees can find answers to AI-related questions, such as an internal AI Q&A agent. Additionally, directing employees to resources such as AI training on Trailhead, our free online learning platform, and dedicating time for upskilling and learning will help ensure workforces are ready for this new era of humans working together with AI. Investing in education fosters confidence in using AI tools responsibly while reinforcing the company’s AI governance framework.

Take the next step toward responsible AI use

As AI adoption grows, organizations must proactively define responsible AI use for employees in order to take advantage of the opportunities this technology has to offer. A well-crafted AI policy ensures compliance, protects data, and gives employees the confidence to use AI ethically and effectively across the organization.

Now is the time to create or review internal AI use policies, explore Salesforce and Slack AI tools designed with built-in trust and security, and invest in employee training through resources like Trailhead, the free online learning platform from Salesforce.